KDnuggets

Activation functions in neural networks

With a purely linear activation, we lose the benefit of stacking layers, because a composition of linear functions is itself linear. Specifically, in an artificial neural network (ANN) we compute the sum of products of the inputs X and their corresponding weights W, apply an activation function f(x) to it to get the output of that layer, and feed that output as the input to the next layer.
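Here is a minimal pure-Python sketch of the layer computation just described. Each unit takes the sum of products of inputs and weights, adds a bias, and applies f. The `dense_layer` helper, the weights and the biases are hypothetical values chosen for illustration. With the identity as f, the two stacked layers collapse into a single linear layer. Inserting tanh breaks that equivalence.

```python
import math


def dense_layer(x, W, b, f):
    """One fully connected layer: y_j = f(sum_i W[j][i] * x[i] + b[j])."""
    return [f(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
            for row, b_j in zip(W, b)]


def identity(z):
    return z


# Hypothetical weights for a 2 -> 2 -> 1 network.
W1, b1 = [[1.0, 2.0], [0.5, -1.0]], [0.0, 1.0]
W2, b2 = [[2.0, -1.0]], [0.5]
x = [3.0, -2.0]

# Two stacked layers with a linear (identity) activation ...
y_stacked = dense_layer(dense_layer(x, W1, b1, identity), W2, b2, identity)

# ... equal one linear layer with W = W2 W1 and b = W2 b1 + b2.
W = [[sum(W2[0][k] * W1[k][i] for k in range(2)) for i in range(2)]]
b = [sum(W2[0][k] * b1[k] for k in range(2)) + b2[0]]
y_single = dense_layer(x, W, b, identity)

# A non-linear hidden activation (tanh) no longer collapses to that single layer.
y_nonlin = dense_layer(dense_layer(x, W1, b1, math.tanh), W2, b2, identity)
print(y_stacked, y_single, y_nonlin)
```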

The main purpose of the activation function is to introduce non-linear properties into the network. Tanh function: an activation that almost always works better than the sigmoid function is tanh, also known as the hyperbolic tangent function. It is differentiable everywhere.

Output layer: this layer brings the information learned by the network to the outer world.

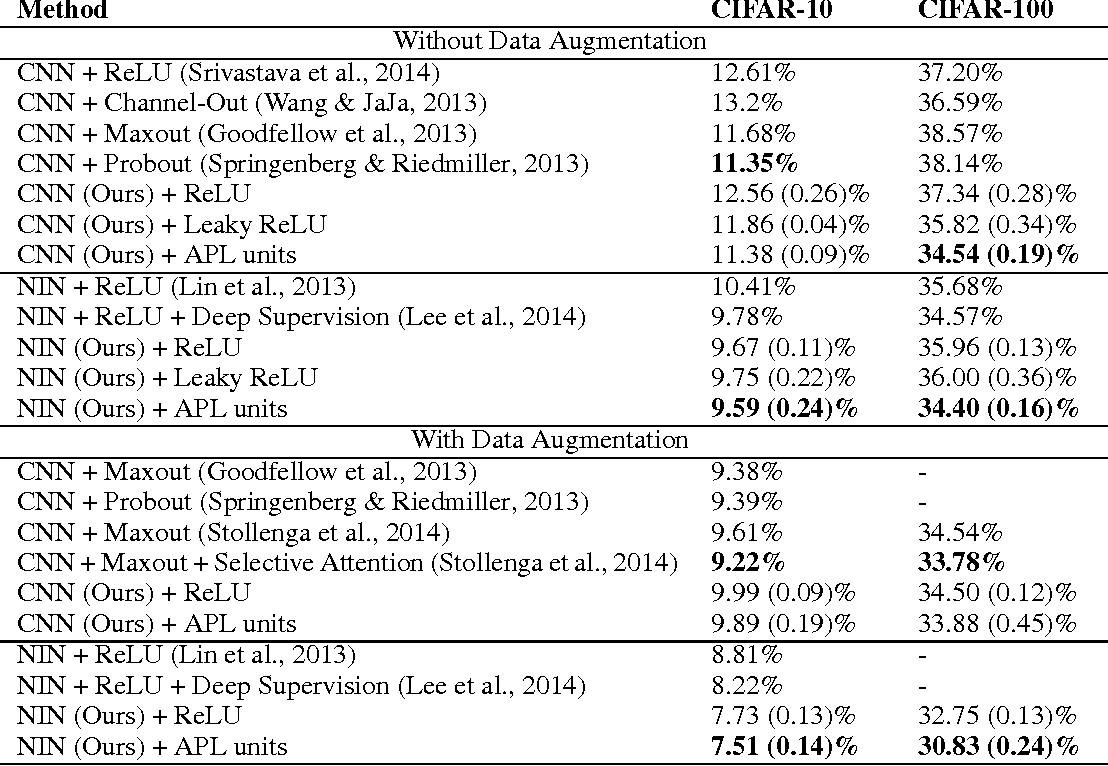

By definition, an activation function is a function used to transform the activation level of a unit (neuron) into an output signal. An activation function serves as a threshold, alternatively called a classification or a partition: it essentially divides the original space into typically two partitions. Activation functions are usually introduced as being required to be non-linear; that is, the role of the activation function is to make neural networks non-linear. The purpose of an activation function in a deep learning context is to ensure that the representation in the input space is mapped to a different space in the output. In all cases, a neural network computes a similarity function between the input and the weights. This can be an inner product, a correlation function or a convolution function; in all cases it is a measure of similarity between the learned weights and the input. This is then followed by an activation function that performs a threshold on the calculated similarity measure. In its most general sense, a neural network layer performs a projection that is followed by a selection. Both projection and selection are necessary for learning. With only projection and no selection, a network will remain in the same space and be unable to create higher levels of abstraction between the layers. The projection operation may in fact be non-linear, but without the threshold function there will be no mechanism to consolidate information. The selection operation enforces information irreversibility, a necessary criterion for learning. Many kinds of activation functions have been proposed over the years (over 640 different proposals); however, best practice confines their use to only a limited set. Here I summarize several commonly used activation functions, such as Sigmoid, Tanh, ReLU and Softmax, as well as their merits and drawbacks. 
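To make the projection-then-selection view concrete, here is a sketch that assumes the inner product as the similarity measure and a step function as the threshold. The weights `w` and the sample points are hypothetical. The step divides the plane into two partitions along the line x1 = x2. Distinct inputs that land on the same side become indistinguishable, which is the information irreversibility described above.

```python
def project(x, w):
    """Projection: the inner product measures similarity between input and weights."""
    return sum(x_i * w_i for x_i, w_i in zip(x, w))


def select(s, threshold=0.0):
    """Selection: a step function thresholds the similarity score into {0, 1}."""
    return 1 if s > threshold else 0


w = [1.0, -1.0]  # hypothetical learned weights
points = [[2.0, 1.0], [1.0, 2.0], [3.0, 0.0], [0.0, 3.0]]
labels = [select(project(p, w)) for p in points]
print(labels)  # [1, 0, 1, 0]
```

[2.0, 1.0] and [3.0, 0.0] both map to 1, so the selected output alone cannot tell them apart.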
Sigmoid units: the sigmoid function σ(x) = 1 / (1 + e^(-x)) squashes a real-valued number into the range between 0 and 1. In particular, large negative numbers become 0 and large positive numbers become 1. Moreover, the sigmoid function has a nice interpretation as the firing rate of a neuron: from not firing at all (0) to fully saturated firing at an assumed maximum frequency (1). Its first drawback is saturation. When the activation sits near either tail at 0 or 1, the local gradient σ(x)(1 - σ(x)) is almost zero, so almost no signal flows back through the neuron during backpropagation. So, it is critically important to initialize the weights of sigmoid neurons to prevent saturation. For instance, if the initial weights are too large, most neurons will become saturated and the network will barely learn. A second drawback is that sigmoid outputs are not zero-centered. This has implications for the dynamics of gradient descent. If the data coming into a neuron is always positive (e.g. x > 0 elementwise in f = w^T x + b), then during backpropagation the gradients on the weights w will be either all positive or all negative. This can introduce undesirable zig-zagging dynamics in the gradient updates for the weights. However, once these gradients are added up across a batch of data, the final update for the weights can have variable signs, somewhat mitigating this issue. Therefore, this is an inconvenience, but it has less severe consequences than the saturated activation problem.
Tanh units: the hyperbolic tangent (tanh) function, used for hidden-layer neuron outputs, is an alternative to the sigmoid function. It squashes a real-valued number to the range between -1 and 1, i.e. tanh(x) ∈ (-1, 1). Like sigmoid units, its activations saturate. However, its output is zero-centered, so tanh solves the second drawback of the sigmoid. Therefore, in practice tanh units are generally preferred to sigmoid units.
ReLU units: the Rectified Linear Unit computes f(x) = max(0, x). In other words, the activation is simply thresholded at zero. Compared with sigmoid and tanh, ReLU has been found to greatly accelerate the convergence of stochastic gradient descent (Krizhevsky et al.). It is argued that this is due to its linear, non-saturating form. Unfortunately, ReLU units can be fragile during training. For example, a large gradient flowing through a ReLU neuron could cause the weights to update in such a way that the neuron will never activate on any data point again. If this happens, the gradient flowing through the unit will be zero forever from that point on. That is, ReLU units can irreversibly die during training, since they can get knocked off the data manifold. 
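The saturation and zero-centering arguments above can be checked numerically by evaluating each function next to its derivative. This is a minimal sketch. The sample inputs are arbitrary. The ReLU derivative at exactly 0 uses the common convention of 0, which is an assumption, since ReLU is not differentiable there.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, squashing x into (0, 1); fine for the moderate inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative sigma(x) * (1 - sigma(x)): peaks at 0.25 and vanishes in the tails."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    """Derivative of tanh, 1 - tanh(x)^2: peaks at 1 and also vanishes in the tails."""
    return 1.0 - math.tanh(x) ** 2


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    """ReLU (sub)gradient, taking 0 at x == 0 by convention."""
    return 1.0 if x > 0 else 0.0


for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:+6.1f}  sigmoid={sigmoid(x):.5f} (grad {sigmoid_grad(x):.5f})  "
          f"tanh={math.tanh(x):+.5f} (grad {tanh_grad(x):.5f})  "
          f"relu={relu(x):.1f} (grad {relu_grad(x):.1f})")

# tanh is a shifted, rescaled sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
print(math.tanh(0.7), 2 * sigmoid(1.4) - 1)
```

The sigmoid outputs stay positive for every input, while tanh is symmetric around 0. A dead ReLU whose pre-activation is always negative receives a gradient of exactly 0.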
The ReLU and the Softplus function f(x) = ln(1 + e^x) are largely similar, except near 0, where the softplus is smooth and differentiable. In deep learning, computing the activation function and its derivative is as frequent as addition and subtraction in arithmetic. By using ReLU, the forward and backward passes are much faster while retaining the non-linear nature of the activation function required for deep neural networks to be useful.
Leaky ReLUs are one attempt to fix the dying ReLU problem. Instead of being zero when x < 0, a leaky ReLU has a small slope a in the negative region (e.g. a = 0.01), i.e. f(x) = x for x > 0 and f(x) = ax otherwise. The slope in the negative region can also be made into a parameter of each neuron; this is the Parametric ReLU (PReLU), which takes the idea further by making the coefficient of leakage a parameter that is learned along with the other neural network parameters. However, the consistency of the benefit across tasks is presently unclear. In the Randomized Leaky ReLU (RReLU), the slopes of the negative parts are randomized within a given range during training and then fixed during testing. It has been reported that, thanks to its randomized nature, RReLU reduced overfitting in the National Data Science Bowl competition. The dying ReLU problem itself typically arises when the learning rate is set too high. One relatively popular choice is the Maxout neuron, which computes max(w1^T x + b1, w2^T x + b2) and therefore generalizes the ReLU and its leaky version (the ReLU is the special case w1 = 0, b1 = 0). The Maxout neuron therefore enjoys all the benefits of a ReLU unit (linear regime of operation, no saturation) and does not have its drawback (dying ReLU). However, unlike ReLU neurons, it doubles the number of parameters for every single neuron, leading to a high total number of parameters.
Softmax: the softmax function maps a vector z of K real values to a probability distribution over K classes, softmax(z)_j = e^(z_j) / Σ_k e^(z_k). In fact, it is the gradient of the log-normalizer of the categorical probability distribution. The softmax function is used in various multiclass classification methods, such as multinomial logistic regression, multiclass linear discriminant analysis, naive Bayes classifiers, and artificial neural networks. For more details, see the link:. 
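Several of the ReLU variants and the softmax described above fit in a few lines each. This is a sketch, not any framework's implementation. The leaky slope `a = 0.01`, the RReLU range [1/8, 1/3], its test-time slope (the midpoint of the range) and the two-piece Maxout are assumptions chosen for illustration. The softmax subtracts the maximum before exponentiating for numerical stability. The finite-difference check at the end illustrates that softmax is the gradient of log-sum-exp, the log-normalizer.

```python
import math
import random


def leaky_relu(x, a=0.01):
    """Leaky ReLU: x for x > 0, a * x otherwise. In PReLU, `a` would be learned."""
    return x if x > 0 else a * x


def rrelu(x, lower=1 / 8, upper=1 / 3, training=True, rng=random):
    """Randomized leaky ReLU (assumed range): random slope in training, fixed at test time."""
    a = rng.uniform(lower, upper) if training else (lower + upper) / 2
    return x if x > 0 else a * x


def softplus(x):
    """ln(1 + e^x), written in an overflow-safe form."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def maxout(x, w1, b1, w2, b2):
    """Two-piece Maxout unit: max(w1 . x + b1, w2 . x + b2)."""
    z1 = sum(wi * xi for wi, xi in zip(w1, x)) + b1
    z2 = sum(wi * xi for wi, xi in zip(w2, x)) + b2
    return max(z1, z2)


def softmax(z):
    """Numerically stable softmax: shift by max(z) before exponentiating."""
    m = max(z)
    exps = [math.exp(zi - m) for zi in z]
    total = sum(exps)
    return [e / total for e in exps]


def log_sum_exp(z):
    """Log-normalizer of the categorical distribution with logits z."""
    m = max(z)
    return m + math.log(sum(math.exp(zi - m) for zi in z))


# Maxout with w1 = 0, b1 = 0 reduces to ReLU applied to w2 . x + b2.
print(maxout([-2.0], [0.0], 0.0, [1.0], 0.0), maxout([3.0], [0.0], 0.0, [1.0], 0.0))

# Central finite differences of log_sum_exp recover softmax(z).
z, h = [2.0, 1.0, 0.1], 1e-6
numeric = []
for j in range(len(z)):
    zp = z[:j] + [z[j] + h] + z[j + 1:]
    zm = z[:j] + [z[j] - h] + z[j + 1:]
    numeric.append((log_sum_exp(zp) - log_sum_exp(zm)) / (2 * h))
print(softmax(z), numeric)
```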
Other activation functions include SoftSign, the exponential linear unit (ELU), the S-shaped rectified linear activation unit (SReLU), adaptive piecewise linear (APL), bent identity, SoftExponential, and so forth. See the Wikipedia link:. If dying ReLU units concern you, give Leaky ReLU or Maxout a try.
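A few of the functions listed above have fixed closed forms that are short enough to write out directly. This sketch assumes alpha = 1 for ELU, a common choice but a hyperparameter. SReLU, APL and SoftExponential are left out because they carry learned parameters.

```python
import math


def elu(x, alpha=1.0):
    """Exponential linear unit: x for x > 0, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)


def softsign(x):
    """SoftSign: x / (1 + |x|), a smooth map into (-1, 1)."""
    return x / (1.0 + abs(x))


def bent_identity(x):
    """Bent identity: (sqrt(x^2 + 1) - 1) / 2 + x."""
    return (math.sqrt(x * x + 1.0) - 1.0) / 2.0 + x


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"x={x:+.1f}  elu={elu(x):+.4f}  softsign={softsign(x):+.4f}  "
          f"bent={bent_identity(x):+.4f}")
```

Unlike ReLU, ELU saturates smoothly to -alpha for large negative inputs instead of outputting exactly 0.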

Which is the right activation function? This article discusses some of the choices. Do you have a favorite activation function? Notice that in both cases there are connections (synapses) between neurons across layers, but not within a layer. You can think of tanh as a shifted, rescaled sigmoid: tanh(x) = 2σ(2x) - 1. The purpose of the activation function is to introduce non-linearity into the output of a neuron. Connections are the inputs of every neuron, and they are weighted, meaning that some connections are more important than others. Imagine a network with randomly initialized (or normalized) weights: almost 50% of the network yields 0 activation, because ReLU outputs 0 for negative values of x. That is a good point to consider when designing deep neural nets. Right: a plot from Krizhevsky et al.
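The roughly 50% figure can be checked with a small simulation. This sketch rests on hypothetical choices: zero-mean Gaussian weights with standard deviation 1/sqrt(n_inputs), zero biases and a single standard-normal input vector. Because the weights are symmetric around zero, each pre-activation is negative with probability about one half, and ReLU maps those to exactly 0.

```python
import random


def relu_zero_fraction(n_inputs=100, n_units=1000, seed=0):
    """Fraction of ReLU units that output exactly 0 for one input at random init."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n_inputs)]
    std = 1.0 / n_inputs ** 0.5  # assumed init scale; the ~50% result does not depend on it
    zeros = 0
    for _ in range(n_units):
        w = [rng.gauss(0.0, std) for _ in range(n_inputs)]
        z = sum(wi * xi for wi, xi in zip(w, x))  # bias assumed to be 0
        if max(0.0, z) == 0.0:
            zeros += 1
    return zeros / n_units


print(relu_zero_fraction())
```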